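10 Efficient Data-Cleaning Methods Every Python Crawler Developer Should Know (Part 3)

Read this series through patiently and you should be equipped to handle roughly 90% of the data-cleaning scenarios you are likely to meet.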

7. GNE: a general-purpose news article extractor (GeneralNewsExtractor)

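One day I was handed a task: my boss dropped an Excel file on me, packed with rows, each one holding a web page URL. "These sites are all competitors' press releases," the boss said. "Xiaoyu, go through every URL and compile the content for me. I want to compare our product's strengths and weaknesses against the competition."

I had just sat down at my desk, brewed a pot of tea, and was getting ready for a day of slacking off when this landed on me. The Excel file held several hundred URLs, and the boss wanted a progress report every day before I left.

However fast my hands are, copying press releases out of web pages with Ctrl-C and Ctrl-V would never get through several hundred sites in a day.

The newcomer at the desk opposite, a fresh graduate who had joined the company as a Python programmer less than a month earlier, heard me sighing and tossed me three letters: GNE.

So I followed the lead and found this excellent general-purpose library for extracting the main text of news pages. I spent half a day reading the documentation, then used the other half to apply what I had just learned and pull together the content of those several hundred sites. Work I had estimated at a week was finished within a single day. Here is how to use the library.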

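GeneralNewsExtractor (GNE) is a general-purpose module for extracting the main content of news pages. Given the HTML of a news page, it outputs the body text, title, author, publish time, the URLs of images in the body, and the source code of the tag that contains the body. GNE performs very well on hundreds of Chinese news sites, including Toutiao, NetEase News, Gamersky, Guancha, ifeng.com, Tencent News, ReadHub, and Sina News, where its accuracy approaches 100%.

The following sections walk through how to use GNE.

Environment: Python 3

Usage tutorial

Installing GNE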

    # Pick either of the two options below

    # Install with pip
    pip install --upgrade gne

    # Install with pipenv

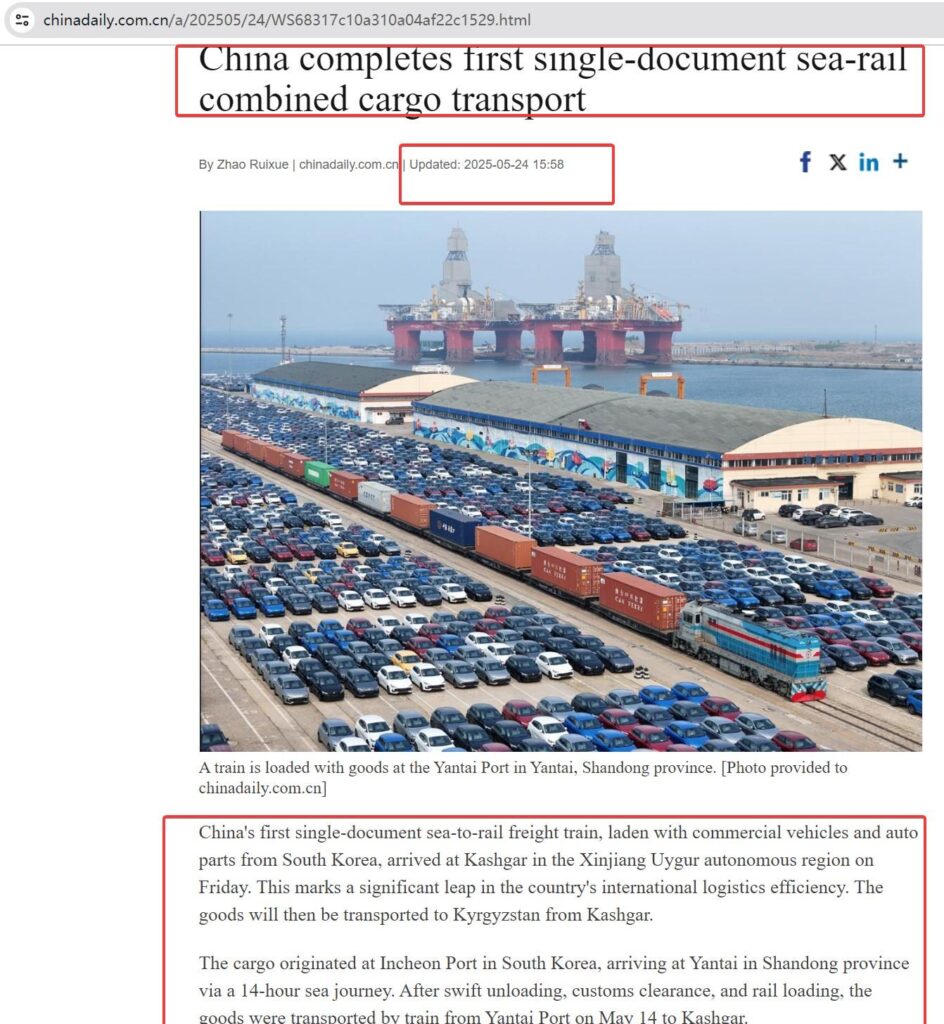

    pipenv install gne

Usage is also very simple. Take China Daily as the target site:

URL: https://www.chinadaily.com.cn/a/202505/24/WS68317c10a310a04af22c1529.html

Our task is to extract the title, the body text, and the publish time.

The first step is fetching the page's HTML source, which is straightforward.

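    import requests

    url = "https://www.chinadaily.com.cn/a/202505/24/WS68317c10a310a04af22c1529.html"

    def crawl(url):
        """Fetch a page and return its HTML source as text."""
        headers = {
            'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
            'Referer': 'https://www.chinadaily.com.cn/',
            'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/126.0.0.0 Safari/537.36',
        }
        # A timeout keeps one slow site from hanging the whole run
        response = requests.get(url, headers=headers, timeout=10)
        response.raise_for_status()  # fail loudly on 4xx/5xx responses
        return response.text

If you run into a site with anti-crawling measures, such as IP bans, you can try routing your requests through a proxy. Reference: Residential IP Proxy: Dedicated Proxy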
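The proxy suggestion above can be sketched as follows. This is a minimal example, assuming you already have an HTTP proxy of your own; the address `proxy.example.com:8000`, the credentials, and the helper names `build_proxies` and `crawl_via_proxy` are placeholders and do not come from the original post. It relies only on the `proxies` argument that requests accepts.

```python
def build_proxies(proxy_url):
    """Return the mapping that requests expects for its `proxies` argument."""
    # The same proxy is used for both plain and TLS traffic in this sketch.
    return {"http": proxy_url, "https": proxy_url}


def crawl_via_proxy(url, proxy_url, timeout=10):
    """Fetch a page through the given proxy and return its HTML as text."""
    # requests is third-party; it is imported here so build_proxies can be
    # used and inspected on its own.
    import requests

    response = requests.get(url, proxies=build_proxies(proxy_url), timeout=timeout)
    response.raise_for_status()
    return response.text


# Placeholder proxy address; replace with the one your provider gives you.
PROXY_URL = "http://user:password@proxy.example.com:8000"
print(build_proxies(PROXY_URL))
```

Calling `crawl_via_proxy(url, PROXY_URL)` would then stand in for `crawl(url)` on sites that block your own IP.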

Then pass the fetched HTML source to GNE for extraction.

    from gne import GeneralNewsExtractor

    extractor = GeneralNewsExtractor()
    html = crawl(url)  # crawl() and url come from the previous snippet
    result = extractor.extract(html)

    print('Title --> ', result['title'], '\n')
    print('Content -->', result['content'], '\n')
    print('PublishTime -->', result['publish_time'])

Output:

Title --> China completes first single-document sea-rail combined cargo transport

Content --> A train is loaded with goods at the Yantai Port in Yantai, Shandong province. [Photo provided to chinadaily.com.cn]

China's first single-document sea-to-rail freight train, laden with commercial vehicles and auto parts from South Korea, arrived at Kashgar in the Xinjiang Uygur autonomous region on Friday. This marks a significant leap in the country's international logistics efficiency. The goods will then be transported to Kyrgyzstan from Kashgar.

The cargo originated at Incheon Port in South Korea, arriving at Yantai in Shandong province via a 14-hour sea journey. After swift unloading, customs clearance, and rail loading, the goods were transported by train from Yantai Port on May 14 to Kashgar.

The single-document system allows seamless coordination between international shipping and domestic rail transport under one unified freight document, eliminating excessive paperwork. The total transit time from South Korea to Kyrgyzstan is slashed from 25 days to 12.

Previously, fragmented cross-border shipments from Japan and South Korea required logistics firms to arrange separate contracts with domestic carriers, often forcing small-scale cargo to wait for consolidation. This led to prolonged waits, cumbersome procedures, and tracking difficulties.

"By integrating rail and port authorities, we ensure goods are loaded within one hour of arrival and dispatched within three hours," said Yu Qunjie, director of the production and dispatching command center at Yantai Railway Station of China Railway Jinan Group.

PublishTime --> 2025-05-24

As you can see, the input is a pile of HTML source in which JavaScript, HTML markup, ads, floating elements, and the body text are all mixed together, yet GNE extracts the content correctly.

It returns data in this format:

    {"title": "xxxx", "publish_time": "2019-09-10 11:12:13", "author": "yyy", "content": "zzzz", "images": ["/xxx.jpg", "/yyy.png"]}

The images field holds the URLs of the images found in the article body.
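The returned dictionary usually needs a little post-processing before it goes into a report. The sketch below works on the sample shown above and uses only the standard library; the helper names `parse_publish_time` and `absolute_images` are made up for illustration, and the list of time formats is an assumption based on the two shapes seen in this article (`2019-09-10 11:12:13` and `2025-05-24`), so extend it for the sites you crawl.

```python
from datetime import datetime
from urllib.parse import urljoin

# Formats tried in order; an assumption, not an exhaustive list.
TIME_FORMATS = ("%Y-%m-%d %H:%M:%S", "%Y-%m-%d %H:%M", "%Y-%m-%d")


def parse_publish_time(value):
    """Return a datetime for a recognised publish_time string, else None."""
    if not value:
        return None
    for fmt in TIME_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt)
        except ValueError:
            continue
    return None


def absolute_images(result, page_url):
    """Resolve relative paths in result['images'] against the page URL."""
    return [urljoin(page_url, src) for src in result.get("images", [])]


sample = {
    "title": "xxxx",
    "publish_time": "2019-09-10 11:12:13",
    "author": "yyy",
    "content": "zzzz",
    "images": ["/xxx.jpg", "/yyy.png"],
}
page_url = "https://www.chinadaily.com.cn/a/202505/24/WS68317c10a310a04af22c1529.html"

print(parse_publish_time(sample["publish_time"]))
print(absolute_images(sample, page_url))
```

Resolving the image paths against the page URL matters because paths such as `/xxx.jpg` are relative to the site and cannot be downloaded on their own.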

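- If automatic title extraction fails, you can specify an XPath:

      from gne import GeneralNewsExtractor

      extractor = GeneralNewsExtractor()
      html = 'Your HTML source code'
      # Specify the XPath of the title here
      result = extractor.extract(html, title_xpath='//h5/text()')
      print(result)

- If the page has a comment section, long comments can have a high text density and interfere with extraction of the article body. You can pass XPaths for the nodes to exclude: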

      html = 'Your HTML source code'
      # List the XPaths of the noise nodes to filter out here
      result = extractor.extract(html, noise_node_list=['//div[@class="comment-list"]'])
      print(result)

Notes

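- The HTML you pass to GNE should be the HTML after JavaScript rendering, not just the raw page source. For server-rendered pages such as the China Daily example above, the source fetched with requests already contains the article; for pages that load their content through JavaScript, render the page first, for example in a headless browser.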

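If the site separates its front end from its back end, cleaning is even simpler: use the approach from the previous installment and fetch the JSON data directly. See Efficient Data-Cleaning Methods (Part 2): JSON Data Cleaning.

For news-type pages, GNE's extraction accuracy can exceed 90%. GNE has no built-in crawler, however; its focus is on processing HTML text.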
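Because GNE only processes HTML, working through a long list of URLs means pairing it with your own fetch step. The following is a minimal sketch of such a batch loop using only the standard library; `extract_all` and `save_rows` are hypothetical helper names, and the fetch and extract steps are passed in as functions so that the `crawl` function and `GeneralNewsExtractor().extract` from earlier can be plugged in. It writes a CSV file rather than Excel to avoid extra dependencies.

```python
import csv

FIELDS = ["url", "title", "publish_time", "content"]


def extract_all(urls, fetch, extract):
    """Run fetch, then extract, on each URL; return (rows, failures)."""
    rows, failures = [], []
    for url in urls:
        try:
            result = extract(fetch(url))
        except Exception as exc:
            # One bad page should not stop the whole batch.
            failures.append((url, repr(exc)))
            continue
        row = {"url": url}
        for field in FIELDS[1:]:
            row[field] = result.get(field, "")
        rows.append(row)
    return rows, failures


def save_rows(rows, path):
    """Write the rows to a CSV file; the BOM helps Excel detect UTF-8."""
    with open(path, "w", newline="", encoding="utf-8-sig") as handle:
        writer = csv.DictWriter(handle, fieldnames=FIELDS)
        writer.writeheader()
        writer.writerows(rows)
```

With the snippets shown earlier, a run could look like `rows, failures = extract_all(urls, crawl, GeneralNewsExtractor().extract)` followed by `save_rows(rows, 'news.csv')`, where `urls` is the list read from the spreadsheet and `news.csv` is an arbitrary file name. Keeping the failures separate makes it easy to retry only the pages that went wrong.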

8. newspaper

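Compared with GNE, newspaper is a body-text extraction tool with a built-in crawler, which makes it more powerful.

newspaper was also released earlier than GNE and already existed in the Python 2 era. In a Python 3 environment, install it with:

    pip install newspaper3k

Target URL: http://www.cnn.com/2013/11/27/justice/tucson-arizona-captive-girls/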

    from newspaper import Article

    url = 'http://www.cnn.com/2013/11/27/justice/tucson-arizona-captive-girls/'

    article = Article(url)
    article.download()  # fetch the page HTML
    article.parse()     # extract the authors, publish date, and body text

    print('Author -->', article.authors)
    print('Publish date -->', article.publish_date)
    print('Article --> ', article.text)

As the code above shows, you no longer have to write the crawler yourself.

Output

Author --> ['Eliott C. Mclaughlin']

Publish date --> 2013-11-27 00:00:00

Article --> Story highlights "What the kids are telling us, it was 24-7 either loud music or static," the police chief says Girls said they ran to a neighbor's house to escape their knife-wielding stepfather, a report says They tell police they may have been held captive for as long as two years, report says Parents face charges of child abuse, kidnapping; stepfather faces sex abuse count

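# (remaining output omitted)

newspaper can also collect the article URLs it finds on a site so that you can keep traversing them.

The few lines below parse the content of every article found on the site.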

    import newspaper

    cnn_paper = newspaper.build('https://cnn.com')

    for article in cnn_paper.articles:
        print(article.url)
        # Each item is already an Article, so it can be downloaded and parsed directly
        article.download()
        article.parse()
        # Uncomment to print the parsed fields:
        # print('Author -->', article.authors)
        # print('Publish date -->', article.publish_date)
        # print('Article --> ', article.text)

Output

https://www.cnn.com/business/media

https://www.cnn.com/weather/video

https://www.cnn.com/subscription/video/flashdocs/library

https://www.cnn.com/audio/podcasts/all-there-is-with-anderson-cooper

https://www.cnn.com/2025/05/24/business/companies-raise-prices-trump-tariffs

http://cnn.com/2025/05/24/business/companies-raise-prices-trump-tariffs

http://cnn.com/2025/05/23/economy/trump-eu-tariffs

http://cnn.com/2025/05/23/economy/trump-threatens-tariff-apple

http://cnn.com/2025/05/23/business/video/trump-threaten-apple-with-tariff-bessent-digvid

http://cnn.com/2025/05/23/business/us-steel-nippon-trump

http://cnn.com/2025/05/23/politics/video/kaitlan-collins-week-recap-trump-ramaphosa-rfk-jr-budget-cuts-src-digvid

http://cnn.com/2025/05/24/middleeast/israel-pressure-from-allies-war-gaza-intl-cmd

http://cnn.com/2025/05/24/us/blm-plaza-george-floyd-washington-dc

http://cnn.com/2025/05/24/us/affinity-graduation-college-dei-trump

http://cnn.com/2025/05/23/world/harvard-international-students-trump-intl

http://cnn.com/2025/05/24/world/video/harvard-escobar-intv-fst-052306pseg2-cnni-us-fast

http://cnn.com/2025/05/22/us/harvard-university-trump-international-students

http://cnn.com/2025/05/22/us/international-students-harvard-trump-administration

http://cnn.com/2025/05/24/politics/house-tax-spending-cuts-bill-explained

http://cnn.com/politics/live-news/trump-presidency-news-05-24-25#cmb2mtibd000l3b6n3el7bf94

http://cnn.com/2025/05/23/politics/food-assistance-gop-big-beautiful-bill-cbo

http://cnn.com/2025/05/23/politics/video/trump-economy-national-debt-plan-fiscal-policy-mattingly-vrtc

http://cnn.com/2025/05/22/economy/trump-tax-bill-debt-deficit

http://cnn.com/2025/05/23/us/video/titan-submersible-oceangate-bang-vrtc

http://cnn.com/2025/05/24/us/video/harvard-international-students-ban-vrtc

http://cnn.com/2025/05/22/politics/video/trump-body-language-vrtc

http://cnn.com/2025/05/23/politics/video/trump-apple-tariffs-iphone-vrtc

http://cnn.com/2025/05/24/entertainment/video/kermit-the-frog-tea-meme-sidner-vrtc

http://cnn.com/2025/05/23/us/video/billy-joel-brain-disorder-diagnosis-hydrocephalus-explained-dr-sanjay-gupta-vrtc

http://cnn.com/2025/05/23/us/video/san-diego-jet-crash-muntean-vrtc

http://cnn.com/2025/05/23/world/video/trump-south-africa-congo-vrtc

http://cnn.com/2025/05/23/business/video/trump-apple-tariff-bessent-digvid-vrtc

With this tool, even a beginner who wants to extract body text from web pages in bulk can do it with a few lines of code.
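The URL list above mixes section pages, video pages, and the same story under both https://www.cnn.com and http://cnn.com, so it is worth filtering before parsing. The sketch below is one possible approach using only the standard library. It assumes that real stories have a dated path such as /2025/05/24/..., which holds for the URLs listed above but is not a rule taken from newspaper itself, and the helper names `story_key` and `unique_story_urls` are made up for illustration.

```python
import re
from urllib.parse import urlsplit

# Dated story paths look like /2025/05/24/business/...; section pages do not.
DATED_PATH = re.compile(r"^/\d{4}/\d{2}/\d{2}/")


def story_key(url):
    """Return a host+path key for dated, non-video stories, else None."""
    parts = urlsplit(url)
    path = parts.path
    if not DATED_PATH.match(path) or "/video/" in path:
        return None
    host = parts.netloc.lower()
    if host.startswith("www."):
        host = host[4:]
    # Scheme, "www." prefix, and fragment are ignored when comparing stories.
    return host + path.rstrip("/")


def unique_story_urls(urls):
    """Keep the first URL of each story; drop duplicates and non-stories."""
    seen, kept = set(), []
    for url in urls:
        key = story_key(url)
        if key is not None and key not in seen:
            seen.add(key)
            kept.append(url)
    return kept


sample_urls = [
    "https://www.cnn.com/business/media",
    "https://www.cnn.com/2025/05/24/business/companies-raise-prices-trump-tariffs",
    "http://cnn.com/2025/05/24/business/companies-raise-prices-trump-tariffs",
    "http://cnn.com/2025/05/23/business/video/trump-threaten-apple-with-tariff-bessent-digvid",
    "http://cnn.com/2025/05/23/economy/trump-eu-tariffs",
]
for url in unique_story_urls(sample_urls):
    print(url)
```

Of the five sample URLs, only the first copy of the tariffs story and the EU tariffs story survive; the section page, the video page, and the duplicate are dropped.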

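For example, to save the data extracted above to an Excel file, first install the pandas library, along with openpyxl, which pandas uses to write .xlsx files:

    pip install pandas openpyxl

Then collect the parsed data in a list, convert it to a DataFrame, and a single to_excel call does the rest.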

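    import pandas as pd
    import newspaper

    cnn_paper = newspaper.build('https://cnn.com')
    article_list = []

    for article in cnn_paper.articles:
        try:
            article.download()
            article.parse()
        except Exception as exc:
            # Skip pages that fail to download or parse instead of aborting
            print('Skipped -->', article.url, exc)
            continue

        print('Author -->', article.authors)
        print('Publish date -->', article.publish_date)
        print('Article --> ', article.text)

        article_list.append({
            'url': article.url,
            # Join the author list into a single string for the Excel cell
            'author': ', '.join(article.authors),
            # Stored as text so a timezone-aware date cannot break the export
            'publish_date': str(article.publish_date) if article.publish_date else '',
            'text': article.text,
        })

    # Build the table once the loop has finished, then write it out
    df = pd.DataFrame(article_list)
    df.to_excel('cnn_articles.xlsx', index=False)

After the crawl has run for a while, a cnn_articles.xlsx file appears in the current directory.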